Text Classification and Word Embedding

```python
import pandas as pd
import torch
from torchinfo import summary
import numpy as np

# for embedding visualization later
import plotly.express as px
import plotly.io as pio

# for VSCode plotly rendering
# pio.renderers.default = "plotly_mimetype+notebook"

# for appearance
pio.templates.default = "plotly_white"

# for train-test split
from sklearn.model_selection import train_test_split

# for suppressing bugged warnings from torchinfo
import warnings
warnings.filterwarnings("ignore", category = UserWarning)

# use a GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
```


Major components of this set of lecture notes are based on the Text Classification tutorial from the PyTorch documentation.

Deep Text Classification and Word Embedding

In this set of notes, we’ll discuss the problem of text classification. Text classification is a common problem in which we aim to classify pieces of text into different categories. These categories might be about:

- Subject matter: is this news article about news, fashion, finance?

- Emotional valence: is this tweet happy or sad? Excited or calm? This particular class of questions is so important that it has its own name: sentiment analysis.

- Automated content moderation: is this Facebook comment a possible instance of abuse or harassment? Is this Reddit thread promoting violence? Is this email spam?

We saw text classification previously when we first considered the problem of vectorizing pieces of text. We are now going to look at a somewhat more contemporary approach to text using word embeddings.

For this example, we are going to use a data set containing headlines from a large number of different news articles on the website HuffPost. I retrieved this data from Kaggle.

```python
# access the data
url = "https://raw.githubusercontent.com/PhilChodrow/PIC16B/master/datasets/news/News_Category_Dataset_v2.json"
df = pd.read_json(url, lines=True)
df = df[["category", "headline"]]
```

There are over 200,000 headlines listed here, along with the category in which they appeared on the website.

```python
df.head()
```

|  | category | headline |
|---|---|---|
| 0 | CRIME | There Were 2 Mass Shootings In Texas Last Week... |
| 1 | ENTERTAINMENT | Will Smith Joins Diplo And Nicky Jam For The 2... |
| 2 | ENTERTAINMENT | Hugh Grant Marries For The First Time At Age 57 |
| 3 | ENTERTAINMENT | Jim Carrey Blasts 'Castrato' Adam Schiff And D... |
| 4 | ENTERTAINMENT | Julianna Margulies Uses Donald Trump Poop Bags... |

Our task will be to teach an algorithm to classify headlines by predicting the category based on the text of the headline.

Training a model on this much text data can take a lot of time, so we are going to simplify the problem a little by reducing the number of categories. Let’s take a look at which categories we have:

```python
df.groupby("category").size()
```

```
category
ARTS 1509
ARTS & CULTURE 1339
BLACK VOICES 4528
BUSINESS 5937
COLLEGE 1144
COMEDY 5175
CRIME 3405
CULTURE & ARTS 1030
DIVORCE 3426
EDUCATION 1004
ENTERTAINMENT 16058
ENVIRONMENT 1323
FIFTY 1401
FOOD & DRINK 6226
GOOD NEWS 1398
GREEN 2622
HEALTHY LIVING 6694
HOME & LIVING 4195
IMPACT 3459
LATINO VOICES 1129
MEDIA 2815
MONEY 1707
PARENTING 8677
PARENTS 3955
POLITICS 32739
QUEER VOICES 6314
RELIGION 2556
SCIENCE 2178
SPORTS 4884
STYLE 2254
STYLE & BEAUTY 9649
TASTE 2096
TECH 2082
THE WORLDPOST 3664
TRAVEL 9887
WEDDINGS 3651
WEIRD NEWS 2670
WELLNESS 17827
WOMEN 3490
WORLD NEWS 2177
WORLDPOST 2579
dtype: int64
```

Some of these categories are a little odd:

- “Women”?

- “Weird News”?

- What’s the difference between “Style,” “Style & Beauty,” and “Taste”?

- “Parenting” vs. “Parents”?

- Etc?…

Well, there are definitely some questions here! Let’s just choose a few categories and discard the rest. We’re going to give each of the categories an integer that we’ll use to encode the category in the target variable.

```python
categories = {
    "STYLE"   : 0,
    "SCIENCE" : 1,
    "TECH"    : 2
}

# keep only the rows whose category is one of the three above;
# .copy() gives an independent frame, so modifying df below does not trigger SettingWithCopyWarning
df = df[df["category"].apply(lambda x: x in categories.keys())].copy()
df.head()
```

|  | category | headline |
|---|---|---|
| 137 | TECH | Facebook Accused Of Reading Texts And Accessin... |
| 138 | TECH | Self-Driving Uber In Fatal Accident Had 6 Seco... |
| 155 | SCIENCE | Scientists Turn To DNA Technology To Search Fo... |
| 272 | TECH | Instagram Is Adding A 'Mute' Button For The Sa... |
| 285 | SCIENCE | Unusual Asteroid Could Be An Interstellar Gues... |

```python
# replace each category name with its integer code
df["category"] = df["category"].apply(categories.get)
df
```

|  | category | headline |
|---|---|---|
| 137 | 2 | Facebook Accused Of Reading Texts And Accessin... |
| 138 | 2 | Self-Driving Uber In Fatal Accident Had 6 Seco... |
| 155 | 1 | Scientists Turn To DNA Technology To Search Fo... |
| 272 | 2 | Instagram Is Adding A 'Mute' Button For The Sa... |
| 285 | 1 | Unusual Asteroid Could Be An Interstellar Gues... |
| ... | ... | ... |
| 200844 | 2 | Google+ Now Open for Teens With Some Safeguards |
| 200845 | 2 | Web Wars |
| 200846 | 2 | First White House Chief Technology Officer, An... |
| 200847 | 2 | Watch The Top 9 YouTube Videos Of The Week |
| 200848 | 2 | RIM CEO Thorsten Heins' 'Significant' Plans Fo... |

6514 rows × 2 columns

Next, we need to wrap this pandas dataframe as a Torch data set. While we’ve been using pre-implemented Torch classes for things like directories of images, in this case it’s not hard to implement our own `Dataset`. We just need to implement `__getitem__()` to return the appropriate row of the dataframe, and `__len__()` to report how many rows there are.

```python
from torch.utils.data import Dataset, DataLoader

class TextDataFromDF(Dataset):
    def __init__(self, df):
        self.df = df

    def __getitem__(self, index):
        # return (headline, category): column 1 is the text, column 0 is the label
        return self.df.iloc[index, 1], self.df.iloc[index, 0]

    def __len__(self):
        return len(self.df)
```

Now let’s perform a train-validation split and make Datasets from each one.

```python
df_train, df_val = train_test_split(df, shuffle = True, test_size = 0.2)
```

```python
train_data = TextDataFromDF(df_train)
val_data = TextDataFromDF(df_val)
```

Each element of our data sets is a tuple of text and label:

```python
train_data[194]
```

```
('Customized Costume Jewelry (#ArtofY)', 0)
```

Text Vectorization (Again)

Now we need to vectorize our text. This time, we’re not going to use one-hot encodings. Instead, we are going to treat each sentence as a sequence of words, and identify each word via an integer index. First we’ll use a tokenizer to split each sentence into individual words:

```python
from torchtext.data.utils import get_tokenizer
from torchtext.vocab import build_vocab_from_iterator

tokenizer = get_tokenizer('basic_english')
tokenized = tokenizer(train_data[194][0])
tokenized
```

```
['customized', 'costume', 'jewelry', '(', '#artofy', ')']
```

You might reasonably disagree about whether this is a good tokenization: should punctuation marks be included? Should a contraction like “you’re” really become “you”, “’”, and “re”, as this tokenizer splits it? These are excellent questions that we won’t discuss much further right now.
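To get a feel for what a tokenizer like `basic_english` does, here is a small plain-Python sketch. It is a hypothetical, simplified approximation written for illustration (the name `simple_tokenize` and its short punctuation list are assumptions, not torchtext's code): it lowercases the text, surrounds a few punctuation marks with spaces so they become separate tokens, and splits on whitespace. It reproduces the tokenization of the jewelry headline shown above.

```python
# Hypothetical, simplified tokenizer in the spirit of basic_english (not torchtext's code).
PUNCTUATION = ["'", ".", ",", "(", ")", "!", "?"]

def simple_tokenize(text):
    """Lowercase, split punctuation marks off as separate tokens, split on whitespace."""
    text = text.lower()
    for mark in PUNCTUATION:
        # surround each punctuation mark with spaces so it becomes its own token
        text = text.replace(mark, f" {mark} ")
    # str.split() with no argument splits on runs of whitespace and drops empty strings
    return text.split()

print(simple_tokenize("Customized Costume Jewelry (#ArtofY)"))
# ['customized', 'costume', 'jewelry', '(', '#artofy', ')']
print(simple_tokenize("we can't believe"))
# ['we', 'can', "'", 't', 'believe']
```

Real tokenizers have many more rules, and some (such as subword tokenizers) work quite differently, so treat this only as a model of the basic idea.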

We’re now ready to build a vocabulary. A vocabulary is a mapping from words to integers. The code below loops through the training data and uses it to build such a mapping.
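Before looking at the torchtext version, here is a library-free sketch of what such a mapping can contain. The helpers `build_simple_vocab` and `encode` are hypothetical and are not torchtext's API: the sketch counts how often each token appears, reserves index 0 for a special `"<unk>"` token, numbers the remaining tokens from most to least frequent (breaking ties alphabetically, a choice made for this sketch), and sends any unseen word to `"<unk>"`.

```python
from collections import Counter

def build_simple_vocab(token_lists, unk="<unk>"):
    """Hypothetical helper: map each token to an integer, most frequent first."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    # sort by decreasing count, breaking ties alphabetically
    ordered = sorted(counts, key=lambda tok: (-counts[tok], tok))
    itos = [unk] + ordered                          # index -> string
    stoi = {tok: i for i, tok in enumerate(itos)}   # string -> index
    return stoi, itos

def encode(tokens, stoi, unk="<unk>"):
    # tokens never seen while building the vocabulary map to the index of unk
    return [stoi.get(tok, stoi[unk]) for tok in tokens]

headlines = [["the", "cat", "sat"], ["the", "dog", "sat"], ["a", "cat"]]
stoi, itos = build_simple_vocab(headlines)
print(itos)                                   # ['<unk>', 'cat', 'sat', 'the', 'a', 'dog']
print(encode(["the", "cat", "flew"], stoi))   # [3, 1, 0]
```

The fallback to `"<unk>"` plays the same role as the default index set on the torchtext vocabulary: a headline in the validation set may contain words that never appeared in training.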

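```python
def yield_tokens(data_iter):
    # generate the list of tokens for each headline in the data set
    for text, _ in data_iter:
        yield tokenizer(text)

vocab = build_vocab_from_iterator(yield_tokens(train_data), specials=["<unk>"])
# map any word not seen during training to the "<unk>" token
vocab.set_default_index(vocab["<unk>"])
```

Here are the first ten elements of the vocabulary: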

```python
vocab.get_itos()[0:10]
```

```
['<unk>', "'", 'the', 'to', ',', 's', 'of', 'in', 'a', 'is']
```

This vocabulary can be applied to a list of tokens like this:

```python
vocab(tokenized)
```

```
[5589, 1075, 764, 25, 4374, 26]
```

Batch Collation

Now we’re ready to construct the function that is going to actually pass a batch of data to our training loop. Here are the main steps:

- We pull some feature data (i.e. a batch of headlines).

- We represent each headline as a sequence of integers using the vocab.

- We pad each headline with an unused integer index if necessary, and truncate headlines that are too long, so that all headlines have the same length. The padding index corresponds to “blank” or “no words in this slot.”

- We return the batch of headlines as a consolidated tensor.
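The padding-and-truncation steps above can be sketched in plain Python, without torch. The helpers `pad_or_truncate` and `collate` and the small numbers are hypothetical; `pad_index` plays the role of an integer that no real token uses (in these notes that is `num_tokens`, one past the last vocabulary index).

```python
def pad_or_truncate(indices, max_len, pad_index):
    """Return a list of exactly max_len integers."""
    clipped = indices[:max_len]                          # drop tokens beyond max_len
    return clipped + [pad_index] * (max_len - len(clipped))

def collate(index_lists, max_len, pad_index):
    # every row now has the same length, so the batch is rectangular
    return [pad_or_truncate(ix, max_len, pad_index) for ix in index_lists]

batch = collate([[5, 2, 9], [7, 1, 4, 4, 8, 3]], max_len=5, pad_index=10)
for row in batch:
    print(row)
# [5, 2, 9, 10, 10]
# [7, 1, 4, 4, 8]
```

Because every row has the same length, the batch can be stored as a single 2-dimensional tensor, which is what `torch.stack` produces in the collation function.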

```python
max_len = 30
num_tokens = len(vocab.get_itos())

def text_pipeline(x):
    # convert the headline to a list of integer token indices
    tokens = vocab(tokenizer(x))
    # start from a sequence that is all padding (index num_tokens is not used by the vocab)
    y = torch.zeros(max_len, dtype=torch.int64) + num_tokens
    # truncate headlines longer than max_len
    if len(tokens) > max_len:
        tokens = tokens[0:max_len]
    # overwrite the leading slots with the actual token indices
    y[0:len(tokens)] = torch.tensor(tokens, dtype=torch.int64)
    return y

label_pipeline = lambda x: int(x)
```

```python
text_pipeline("we can't believe")
```

```
tensor([  36,   33,    1,   30,  667, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
        9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
        9618, 9618, 9618, 9618, 9618, 9618])
```

```python
def collate_batch(batch):
    label_list, text_list = [], []
    for (_text, _label) in batch:
        # add label to list
        label_list.append(label_pipeline(_label))
        # add text (as sequence of integers) to list
        processed_text = text_pipeline(_text)
        text_list.append(processed_text)
    label_list = torch.tensor(label_list, dtype=torch.int64)
    # stack the padded sequences into a (batch_size, max_len) tensor
    text_list = torch.stack(text_list)
    return label_list.to(device), text_list.to(device)
```

```python
train_loader = DataLoader(train_data, batch_size=8, shuffle=True, collate_fn=collate_batch)
val_loader = DataLoader(val_data, batch_size=8, shuffle=True, collate_fn=collate_batch)
```

Let’s take a look at a batch of data now:

```python
next(iter(train_loader))
```

```
(tensor([2, 2, 2, 1, 1, 1, 1, 0]),
 tensor([[   2,    1, 1524,    1, 2745, 3064,   11,    1,  230,  436, 1004,    1,
            67,   14,   28,    7,   31,  208, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [6919, 7111,    4,    2,  274,    7,    2,   38, 6311,  433, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [4289,  106, 2199, 2083,    4,  832,   90, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [8116,    1, 4509,  908, 1245,  825, 2207, 8253,  384, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [   2, 1660,  473,   27,    1,    5, 1836,    3,  431, 2732, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [  44,   75,  407, 1825,   23, 3736, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [ 166,    2, 3299, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618],
         [  75,   23,    2, 1074,  762,   36,    1,  393,  132,  518, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618, 9618,
          9618, 9618, 9618, 9618, 9618, 9618]]))
```

The first element is the tensor of labels, one for each of the 8 headlines in the batch. The second is an 8 × 30 tensor in which each row is one headline, encoded as a sequence of integer token indices and padded out to `max_len` = 30 entries with the unused index 9618 (that is, `num_tokens`).

Modeling

Word Embedding

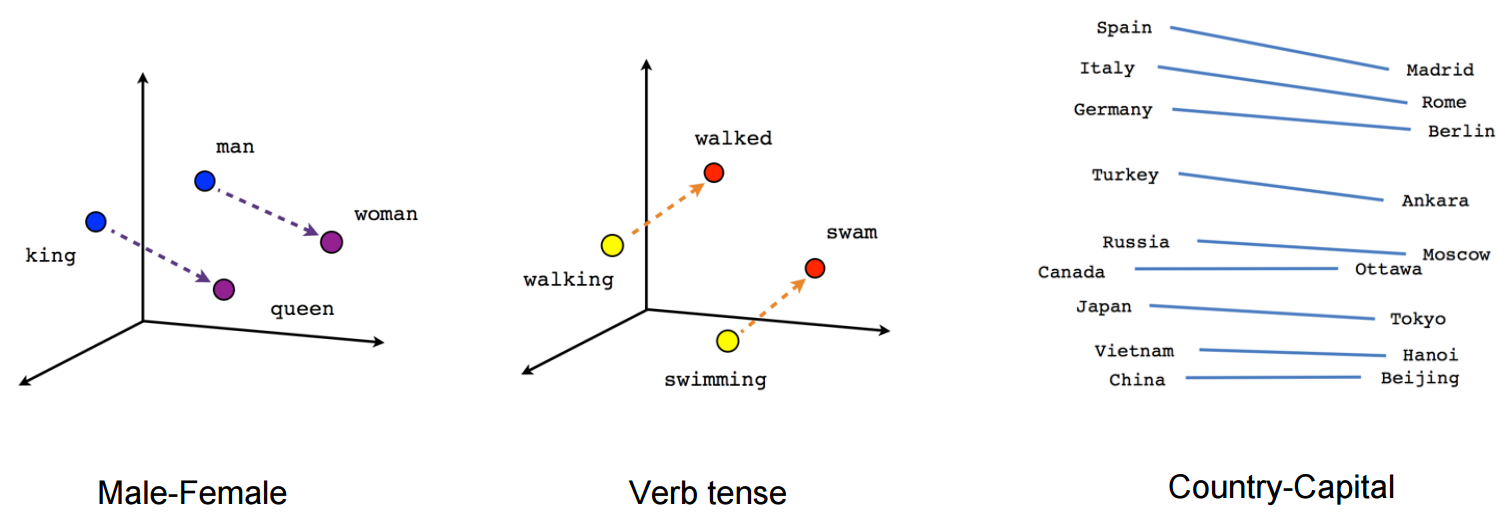

A word embedding refers to a representation of a word in a vector space. Each word is assigned an individual vector. The general aim of a word embedding is to create a representation such that words with related meanings are close to each other in a vector space, while words with different meanings are farther apart. One usually hopes for the directions connecting words to be meaningful as well. Here’s a nice diagram illustrating some of the general concepts:

Image credit: Towards Data Science
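“Close” is commonly measured with cosine similarity, the cosine of the angle between two vectors. The sketch below uses tiny, hand-made 3-dimensional vectors purely for illustration (they are not learned from any data) to show both ideas from the paragraph above: related words point in similar directions, and a direction (here, the offset from “man” to “woman”) can be reused on another word.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (1 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Made-up toy embeddings, chosen by hand for illustration only.
emb = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [-0.7, 0.1, 0.1],
}

# related words are more similar than unrelated ones
print(cosine_similarity(emb["king"], emb["queen"]))
print(cosine_similarity(emb["king"], emb["apple"]))

# direction arithmetic: king - man + woman, then find the nearest remaining word
target = [k - m + w for k, m, w in zip(emb["king"], emb["man"], emb["woman"])]
candidates = [w for w in emb if w not in ("king", "man", "woman")]
best = max(candidates, key=lambda w: cosine_similarity(emb[w], target))
print(best)  # queen
```

In a learned embedding these relationships hold only approximately, if at all; the toy vectors were constructed so that they hold exactly.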

Word embeddings are often produced as intermediate stages in many machine learning algorithms. In our case, we’re going to add an embedding layer at the very base of our model. We’ll allow the user to flexibly specify the number of dimensions.

We’ll use pretty low-dimensional embeddings in this lecture, but state-of-the-art embeddings typically have a much higher number of dimensions. For example, the Embedding Projector demo supplied by TensorFlow uses a default dimension of 200.
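An embedding layer is essentially a lookup table: a weight matrix with one row per token, where looking up token i returns row i. Equivalently, it multiplies a one-hot vector by that matrix, which is why its entries can be learned by gradient descent like any other weights. The tiny matrix below is made up for illustration; in PyTorch, `nn.Embedding` stores its matrix in the `weight` attribute.

```python
# A made-up 4-token vocabulary with 2-dimensional embeddings (one row per token).
W = [
    [0.5, -1.0],   # token 0
    [2.0,  0.3],   # token 1
    [-0.7, 0.8],   # token 2
    [0.0,  1.5],   # token 3
]

def lookup(W, index):
    # embedding as table lookup: select one row
    return W[index]

def one_hot_times_matrix(W, index):
    # embedding as a matrix product: one_hot(index) @ W
    one_hot = [1.0 if i == index else 0.0 for i in range(len(W))]
    dim = len(W[0])
    return [sum(one_hot[i] * W[i][j] for i in range(len(W))) for j in range(dim)]

# the two views agree for every token
for token in range(len(W)):
    assert lookup(W, token) == one_hot_times_matrix(W, token)

# a sequence of token indices becomes a sequence of vectors
sequence = [2, 0, 3]
print([lookup(W, t) for t in sequence])  # [[-0.7, 0.8], [0.5, -1.0], [0.0, 1.5]]
```

The lookup view is what makes embeddings cheap in practice: no one-hot vectors are ever materialized.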

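```python
from torch import nn

class TextClassificationModel(nn.Module):

    def __init__(self, vocab_size, embedding_dim, max_len, num_class):
        super().__init__()
        # one extra row (index vocab_size) for the padding token
        self.embedding = nn.Embedding(vocab_size+1, embedding_dim)
        # the linear layer sees all max_len embeddings concatenated together
        self.fc = nn.Linear(max_len*embedding_dim, num_class)

    def forward(self, x):
        # x: (batch_size, max_len) tensor of token indices
        x = self.embedding(x)      # (batch_size, max_len, embedding_dim)
        x = torch.flatten(x, 1)    # (batch_size, max_len * embedding_dim)
        x = self.fc(x)             # (batch_size, num_class)
        return x
```

Let’s train a model!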
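Before training, it can help to check the shape and parameter arithmetic of this architecture by hand. The sketch below assumes the values used in these notes: a vocabulary of 9618 tokens (so the padding index is 9618), `max_len` = 30, `embedding_dim` = 3, and 3 classes. The helper `parameter_counts` is hypothetical. A batch of shape (8, 30) becomes (8, 30, 3) after the embedding, (8, 90) after flattening, and (8, 3) after the linear layer.

```python
def parameter_counts(vocab_size, embedding_dim, max_len, num_class):
    """Count parameters of the embedding -> flatten -> linear architecture."""
    # embedding table: one row per token plus one row for the padding index
    embedding_params = (vocab_size + 1) * embedding_dim
    # linear layer: weight matrix (in_features x num_class) plus one bias per class
    in_features = max_len * embedding_dim   # size of each flattened headline
    linear_params = in_features * num_class + num_class
    return embedding_params, linear_params, embedding_params + linear_params

counts = parameter_counts(vocab_size=9618, embedding_dim=3, max_len=30, num_class=3)
print(counts)  # (28857, 273, 29130)
```

These numbers can be compared with the `Param #` column of the torchinfo summary. Note that almost all of the parameters live in the embedding table.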

```python
vocab_size = len(vocab)
embedding_dim = 3
model = TextClassificationModel(vocab_size, embedding_dim, max_len, 3).to(device)
```

```python
INPUT_SHAPE = (1,max_len)
summary(model, INPUT_SHAPE, dtypes=[torch.long])
```

```
==========================================================================================
Layer (type:depth-idx)                   Output Shape              Param #
==========================================================================================
TextClassificationModel                  [1, 3]                    --
├─Embedding: 1-1                         [1, 30, 3]                28,857
├─Linear: 1-2                            [1, 3]                    273
==========================================================================================
Total params: 29,130
Trainable params: 29,130
Non-trainable params: 0
Total mult-adds (M): 0.03
==========================================================================================
Input size (MB): 0.00
Forward/backward pass size (MB): 0.00
Params size (MB): 0.12
Estimated Total Size (MB): 0.12
==========================================================================================
```

```python
import time

optimizer = torch.optim.Adam(model.parameters(), lr=.1)
loss_fn = torch.nn.CrossEntropyLoss()

def train(dataloader, epoch):
    epoch_start_time = time.time()
    # keep track of some counts for measuring accuracy
    total_acc, total_count = 0, 0

    for idx, (label, text) in enumerate(dataloader):
        # zero gradients
        optimizer.zero_grad()
        # form prediction on batch
        predicted_label = model(text)
        # evaluate loss on prediction
        loss = loss_fn(predicted_label, label)
        # compute gradient
        loss.backward()
        # take an optimization step
        optimizer.step()

        # for printing accuracy
        total_acc += (predicted_label.argmax(1) == label).sum().item()
        total_count += label.size(0)

    print(f'| epoch {epoch:3d} | train accuracy {total_acc/total_count:8.3f} | time: {time.time() - epoch_start_time:5.2f}s')

def evaluate(dataloader):
    total_acc, total_count = 0, 0
    with torch.no_grad():
        for idx, (label, text) in enumerate(dataloader):
            predicted_label = model(text)
            total_acc += (predicted_label.argmax(1) == label).sum().item()
            total_count += label.size(0)
    return total_acc/total_count
```

```python
EPOCHS = 10
for epoch in range(1, EPOCHS + 1):
    train(train_loader, epoch)
```

```
| epoch 1 | train accuracy 0.603 | time: 0.82s
| epoch 2 | train accuracy 0.857 | time: 0.91s
| epoch 3 | train accuracy 0.927 | time: 0.81s
| epoch 4 | train accuracy 0.941 | time: 0.96s
| epoch 5 | train accuracy 0.962 | time: 0.82s
| epoch 6 | train accuracy 0.976 | time: 0.83s
| epoch 7 | train accuracy 0.974 | time: 1.00s
| epoch 8 | train accuracy 0.978 | time: 0.94s
| epoch 9 | train accuracy 0.982 | time: 0.81s
| epoch 10 | train accuracy 0.981 | time: 1.22s
```

```python
evaluate(val_loader)
```

```
0.8173445894090561
```

Our accuracy on validation data (about 82%) is much lower than what we achieved on the training data (about 98%). This is a possible sign of overfitting. Regardless, this predictive performance is much better than what we would have achieved by guesswork:

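```python
df_train.groupby("category").size() / len(df_train)
```

```
category
0 0.345231
1 0.334101
2 0.320668
dtype: float64
```

Always predicting the most common class (0, “STYLE”) would be right only about 35% of the time.

Inspecting Word Embeddings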

Recall from our discussion of image classification that the intermediate layers learned by the model can help us understand the representations that the model uses to construct its final outputs. In the case of word embeddings, we can simply extract this matrix from the corresponding layer of the model:

```python
# copy the learned weights into a NumPy array without moving the model itself off the GPU
embedding_matrix = model.embedding.weight.detach().cpu().numpy()
```

Let’s also extract the words from our vocabulary:

```python
tokens = vocab.get_itos()
```

The weight matrix has one row per token (plus one row for the padding index) and `embedding_dim` = 3 columns. Even three dimensions are awkward to inspect on a flat page, so instead we are going to use our friend PCA to extract a 2-dimensional representation that we can plot.

```python
from sklearn.decomposition import PCA

pca = PCA(n_components=2)
weights = pca.fit_transform(embedding_matrix)
```

We’ll use the Plotly package to do the plotting. Plotly works best with dataframes:

```python
tokens = vocab.get_itos()
# add a placeholder "word" for the padding index, which has an embedding row but no vocabulary entry
tokens.append(" ")

embedding_df = pd.DataFrame({
    'word' : tokens,
    'x0'   : weights[:,0],
    'x1'   : weights[:,1]
})

embedding_df
```

|  | word | x0 | x1 |
|---|---|---|---|
| 0 | `<unk>` | 0.074547 | -1.060667 |
| 1 | ' | 0.639172 | 0.628451 |
| 2 | the | 0.948153 | 0.196997 |
| 3 | to | 1.069958 | 2.498176 |
| 4 | , | 2.831909 | 0.761538 |
| ... | ... | ... | ... |
| 9614 | ‘tail | -5.572474 | 0.168821 |
| 9615 | ‘what | -0.293221 | 3.162487 |
| 9616 | ’90s | 2.491629 | 1.970572 |
| 9617 | 💯 | 4.792400 | -0.721765 |
| 9618 |  | -0.130364 | 0.241826 |

9619 rows × 3 columns

And, let’s plot! We’ve used Plotly for the interactivity: hover over a dot to see the word it corresponds to.

```python
fig = px.scatter(embedding_df,
                 x = "x0",
                 y = "x1",
                 size = list(np.ones(len(embedding_df))),
                 size_max = 10,
                 hover_name = "word")

fig.show()
```

We’ve made an embedding! We might notice that this embedding appears to be a little bit “stretched out” in three main directions. Each one corresponds to one of the three classes in our training data.
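The 2-dimensional coordinates in this plot come from PCA, which we used as a black box through scikit-learn. The core computation is short: center the rows, take a singular value decomposition, and project onto the top two right-singular vectors. Here is a NumPy sketch on random stand-in data (not the learned embedding); the function name `pca_2d` is hypothetical, and the sign of each component is arbitrary, so results may differ from scikit-learn's by a factor of -1 per column.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their top two principal components."""
    X_centered = X - X.mean(axis=0)               # PCA operates on centered data
    # rows of Vt are the principal directions, ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:2].T                  # (n_rows, 2) coordinates

rng = np.random.default_rng(0)
# stand-in for an embedding matrix: 100 "words" in 3 dimensions,
# stretched much more along the first axis than along the others
X = rng.normal(size=(100, 3)) * np.array([5.0, 1.0, 0.2])
coords = pca_2d(X)
print(coords.shape)         # (100, 2)
print(coords.var(axis=0))   # the first component carries the most variance
```

Dropping the last component discards the direction of least variance, which is why a 3-dimensional embedding can often be summarized reasonably well in two dimensions.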

Bias in Text Embeddings

Whenever we create a machine learning model that might conceivably have impact on the thoughts or actions of human beings, we have a responsibility to understand the limitations and biases of that model. Biases can enter into machine learning models through several routes, including the data used as well as choices made by the modeler along the way. For example, in our case:

- Data: we used data from a popular news source.

- Modeler choice: we only used data corresponding to a certain subset of labels.

With these considerations in mind, let’s see what kinds of words our model associates with female and male genders.

```python
feminine = ["she", "her", "woman"]
masculine = ["he", "him", "man"]

highlight_1 = ["strong", "powerful", "smart", "thinking", "brave", "muscle"]
highlight_2 = ["hot", "sexy", "beautiful", "shopping", "children", "thin"]

def gender_mapper(x):
    # assign an integer code to each highlighted word; 0 means "not highlighted"
    if x in feminine:
        return 1
    elif x in masculine:
        return 4
    elif x in highlight_1:
        return 3
    elif x in highlight_2:
        return 2
    else:
        return 0

embedding_df["highlight"] = embedding_df["word"].apply(gender_mapper)
embedding_df["size"] = np.array(1.0 + 50*(embedding_df["highlight"] > 0))

sub_df = embedding_df[embedding_df["highlight"] > 0]
```

```python
import plotly.express as px

fig = px.scatter(sub_df,
                 x = "x0",
                 y = "x1",
                 color = "highlight",
                 size = list(sub_df["size"]),
                 size_max = 10,
                 hover_name = "word",
                 text = "word")

fig.update_traces(textposition='top center')
fig.show()
```

What do you notice about some of the similarities represented in these embeddings? What do you wonder?
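One way to make questions like these quantitative, an idea used in research on bias in word embeddings, is to define a “gender direction” as the difference between the average feminine and average masculine word vectors, and then measure how strongly other words point along it. The sketch below is hypothetical: the function names are illustrative, and the tiny 2-dimensional vectors are made up (deliberately arranged so the measurement has something to detect), not taken from the model trained above. With the real model, `emb` could be filled from rows of `embedding_matrix`.

```python
import math

def mean_vector(vectors):
    # componentwise average of a list of equal-length vectors
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def gender_projection(emb, feminine, masculine, word):
    """Cosine between a word and the gender direction.
    Positive: leans toward the feminine words; negative: toward the masculine words."""
    fem_mean = mean_vector([emb[w] for w in feminine])
    masc_mean = mean_vector([emb[w] for w in masculine])
    direction = unit([f - m for f, m in zip(fem_mean, masc_mean)])
    return sum(a * b for a, b in zip(unit(emb[word]), direction))

# Made-up 2-D vectors for illustration only.
emb = {
    "she": [1.0, 0.2], "her": [0.9, 0.3], "woman": [1.1, 0.1],
    "he": [-1.0, 0.2], "him": [-0.9, 0.3], "man": [-1.1, 0.1],
    "strong": [-0.4, 1.0], "beautiful": [0.5, 1.0], "smart": [0.0, 1.0],
}

fem = ["she", "her", "woman"]
masc = ["he", "him", "man"]
for word in ["strong", "beautiful", "smart"]:
    print(word, round(gender_projection(emb, fem, masc, word), 3))
```

A score near zero (like “smart” here) means the word is roughly neutral along this one direction; it does not rule out other, subtler associations.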

Representational Harm and Representational Bias

Earlier in this course, we discussed allocative bias. Allocative bias occurs when different groups have inequitable access to important resources or opportunities on the basis of their identity. We discussed examples that raised questions about equitable access to personal liberty, employment, and insurance.

Representational bias refers to the systematic cultural representation of marginalized groups in harmful ways, or to denying them cultural representation at all. The perpetuation of harmful stereotypes is perhaps the most well-known form of representational harm. Erasure is another form of representational harm in which representations or topics of interest to marginalized groups are suppressed.

Here’s a very recent example (from Margaret Mitchell) illustrating how representational gender bias shows up in ChatGPT:

I replicated this (my screenshot below).

— MMitchell (@mmitchell_ai) April 23, 2023

Really great example of gender bias, for those of you who need a canonical example to make the point. https://t.co/O1A8Tk7oI1 pic.twitter.com/hKt4HSBzh3

Another form of representational harm in ML systems is the famous historical tendency of Google Search to surface demeaning and offensive search results related to people of color. This tendency was studied by Dr. Safiya Noble in her book Algorithms of Oppression. In one of Dr. Noble’s most famous examples, top results for the phrase “black girls” in 2011 consisted of links to porn sites, which was not the case for searches for “white girls” or “black men.” As late as 2016, an image search for “gorillas” would surface pictures of Black individuals. You can find a brief synopsis of some of Dr. Noble’s findings here (content warning: highly sexually explicit language). Google has since taken steps to improve these specific examples.

Bias in Google Translate

It is well-documented that machine learning algorithms trained on natural text can inherit biases present in those texts. One of the most direct ways in which we can observe such bias is in Google Translate. Some languages, such as Hungarian, do not possess gendered pronouns. When Google Translate attempts to render these pronouns into a gendered language like English, assumptions are made, as pointed out in this Tweet by Dora Vargha. Let’s demonstrate with the following English sentences.

he cooks. she is a political leader. she is an engineer. he is a cleaner. he is beautiful. she is strong.

Translate these into Hungarian and back via Google Translate, and here’s what you’ll get:

she cooks. he is a political leader. he is an engineer. she is cleaning. she is beautiful. he is strong.

Considering that English has a gender-neutral pronoun (they), this would be an easy item to fix, which Google has thus far declined to do.

Intersections of Representational and Allocative Harms

In some cases, representational and allocative harms can intersect and reinforce each other. For example, modern translation systems perform impressively in certain languages but much less well in others. Some of these languages, such as Pashto and Dari, are spoken by many refugees and asylum-seekers to the US. The use of automated translation software when processing these asylum cases has drawn considerable scrutiny and appears to have resulted in the denial of at least one case due to a machine translation error.

More on Bias in Language Models

For more on the topic of bias in language models, you may wish to read the now-infamous paper by Emily Bender, Angelina McMillan-Major, Timnit Gebru, and “Shmargret Shmitchell” (Margaret Mitchell), “On the Dangers of Stochastic Parrots.” This is the paper that ultimately led to the firing of the final two authors by Google in late 2020 and early 2021.

© Phil Chodrow, 2023